From Scouting to Scale: How Innovation Teams Evaluate Emerging Technologies in 2026

Innovation teams have never had more access to ideas, startups, and emerging technologies.

They’ve also never had a harder time answering the questions that actually matter:

- What’s real vs. noise?

- What’s ready vs. interesting?

- What’s worth backing vs. worth watching?

- What should we stop—before we waste a quarter?

In 2026, the challenge isn’t “finding innovation.”

It’s making consistent, defensible decisions at scale—across ideas, vendors, and pilots—without slowing the organization down.

If you’re an Innovation Manager, Open Innovation Lead, or CIO, you already know the pattern: the pipeline fills quickly, but decisions lag. Pilots start, momentum fades, and outcomes get murky. Everyone is busy. Few things make it to production.

This post lays out a practical framework for how high-performing teams evaluate emerging technologies in 2026, and explains why the best innovation programs are shifting from idea management to decision management.

The uncomfortable truth: most innovation programs don’t fail at innovation

They fail at evaluation

Plenty of organizations can generate ideas, run challenges, or meet startups.

What breaks is the ability to evaluate consistently when volume rises.

The symptoms are familiar:

- Too many inputs, not enough decisions

- Evaluation criteria that change depending on the room

- Pilots that start fast but stall without a clear “so what”

- Stakeholder alignment that arrives late—after time and credibility are already spent

- Great narratives, weak evidence

This isn’t a motivation problem.

It’s a system problem.

And the system breaks because most evaluation processes are built on one assumption that no longer holds:

That expert judgment, informal reviews, and “we’ll know it when we see it” can scale.

They can’t—not in 2026.

Why evaluation is harder in 2026 than it was in 2020

(even if your tools are “better”)

Emerging technologies have changed the shape of the decision:

1) The signal-to-noise ratio is worse

AI has lowered the cost of producing “credible-looking” vendors, decks, demos, and prototypes. More solutions look polished sooner. That doesn’t mean they’re enterprise-ready.

2) The time-to-pilot expectation is shorter

Business stakeholders have less patience for long evaluation cycles. If you can’t get to a pilot quickly, someone else will.

3) The cost of a wrong pilot is higher

It’s not just budget. A failed pilot burns stakeholder trust and crowds out bandwidth for better bets.

4) The number of decisions has exploded

A single category can include dozens of vendors, multiple architectural approaches, and fast-moving capability shifts. Evaluation is no longer “a decision.” It’s a continuous decision process.

If you’re feeling pressure to move faster while staying rigorous, you’re not imagining it. That’s the job now.

The shift that separates high-performing teams: from idea volume to decision quality

Many organizations still measure innovation by activity:

- number of ideas submitted

- number of startups reviewed

- number of pilots launched

- number of workshops run

Those aren’t useless—but they’re incomplete.

High-performing teams increasingly measure innovation by:

- time from scouting to decision

- percentage of pilots with defined success criteria

- decision consistency across evaluators

- percentage of pilots that lead to a clear outcome (scale / stop / watch)

- repeatability of the evaluation process

Activity can be outsourced.

Decision quality can’t.

And decision quality is what earns innovation teams credibility.
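
The decision-focused measures above can be computed from a very small record per pipeline item. The sketch below is illustrative only: the `PipelineItem` fields, the sample vendors, and the dates are all hypothetical, and a real program would pull these values from whatever system already tracks its pipeline.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import Optional

CLEAR_OUTCOMES = {"scale", "stop", "watch"}

@dataclass
class PipelineItem:
    name: str
    scouted_on: date
    decided_on: Optional[date]    # date of the first explicit decision, None if still open
    piloted: bool
    has_success_criteria: bool    # criteria agreed before the pilot started
    outcome: Optional[str]        # "scale", "stop", "watch", or None if unresolved

def _pct(part, whole):
    """Percentage rounded to one decimal, or None when there is nothing to measure."""
    return round(100 * part / whole, 1) if whole else None

def decision_metrics(items):
    decided = [i for i in items if i.decided_on is not None]
    pilots = [i for i in items if i.piloted]
    days = [(i.decided_on - i.scouted_on).days for i in decided]
    return {
        "median_days_to_decision": median(days) if days else None,
        "pct_pilots_with_criteria": _pct(
            sum(p.has_success_criteria for p in pilots), len(pilots)),
        "pct_pilots_with_clear_outcome": _pct(
            sum(p.outcome in CLEAR_OUTCOMES for p in pilots), len(pilots)),
    }

# Hypothetical sample data, for illustration only.
items = [
    PipelineItem("vendor-a", date(2026, 1, 5), date(2026, 2, 4), True, True, "scale"),
    PipelineItem("vendor-b", date(2026, 1, 12), date(2026, 1, 26), True, False, None),
    PipelineItem("vendor-c", date(2026, 2, 2), None, False, False, None),
    PipelineItem("vendor-d", date(2026, 2, 9), date(2026, 3, 1), True, True, "stop"),
]

metrics = decision_metrics(items)
print(metrics)
```

Decision consistency across evaluators and repeatability are left out of this sketch because they need per-evaluator scores, which this record does not capture.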

A simple mental model: the innovation pipeline isn’t a funnel

It’s a series of decisions

Most pipelines are treated like “a funnel,” where items move forward unless someone rejects them.

That framing causes two problems:

- Teams optimize for throughput, not quality.

- “Not rejected” becomes the same as “approved.”

A better framing:

Your pipeline is a sequence of decision points, each one designed to reduce uncertainty.

That’s how you prevent pilots from becoming a default next step.

The 2026 evaluation stack

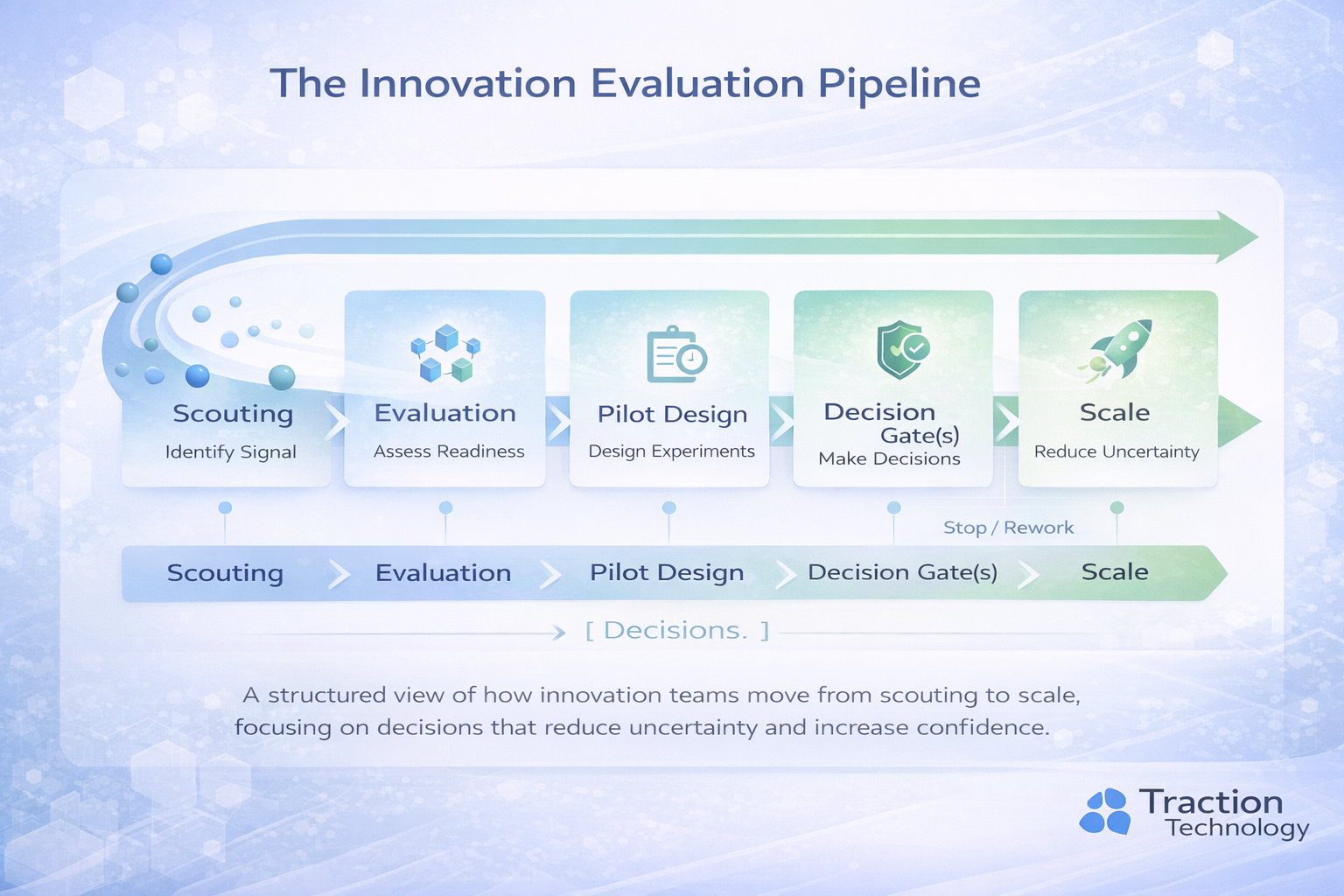

Visualizing the Innovation Evaluation Pipeline:

At a high level, innovation evaluation is not a funnel; it’s a sequence of decisions designed to reduce uncertainty at each stage. The illustration above shows how leading teams move from broad technology scouting to focused pilots and, ultimately, production decisions.

The three layers innovation teams need

Think of evaluation as three distinct layers. Mixing them is what causes confusion.

Layer 1: Relevance (Is this worth attention?)

This is where scouting happens. The goal isn’t certainty. It’s triage.

You’re answering:

- Is the problem meaningful to the business?

- Is this technology direction credible?

- Is there a plausible use case in our environment?

- Is this differentiated or commodity?

- Is the vendor real enough to be worth time?

Layer 2: Readiness (Is this ready for a pilot?)

This is where teams often stumble. They move straight from “interesting” to “pilot” without testing assumptions.

You’re answering:

- What would we need for a pilot to be valid?

- Do we have the data, systems, and owners?

- What are the security / compliance constraints?

- What will success look like?

- What would stop the pilot early?

Layer 3: Scalability (Is this ready for production?)

Most pilots fail here—because scalability questions were never asked early.

You’re answering:

- Can this integrate into operating workflows?

- Who owns it post-pilot?

- What changes are required (process, people, governance)?

- What is the long-term cost and operational burden?

- What measurable business outcome will this support?

If your evaluation process doesn’t explicitly separate these layers, you’ll confuse “interesting,” “pilot-ready,” and “enterprise-ready,” and you’ll pay for it later.

At the end of a good evaluation cycle, innovation teams don’t just have opinions. They have a structured output that supports decisions.

It doesn’t need to be complex. It needs to be consistent.
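
One way to keep the three layers separate is to record the answers per layer and only credit a layer once every earlier layer has been cleared. The following is a minimal sketch under that assumption; the `Layer` enum, the labels, and the yes/no answer format are hypothetical simplifications, not a prescribed scoring method.

```python
from enum import Enum

class Layer(Enum):
    RELEVANCE = 1     # Is this worth attention?
    READINESS = 2     # Is this ready for a pilot?
    SCALABILITY = 3   # Is this ready for production?

LABELS = {
    None: "not yet relevant",
    Layer.RELEVANCE: "interesting",
    Layer.READINESS: "pilot-ready",
    Layer.SCALABILITY: "enterprise-ready",
}

def highest_layer_cleared(answers):
    """answers maps each Layer to a list of booleans, one per question.

    A layer counts only if all of its questions are answered "yes" AND
    every earlier layer has already been cleared.
    """
    cleared = None
    for layer in Layer:  # Enum iteration follows definition order
        checks = answers.get(layer, [])
        if not checks or not all(checks):
            break
        cleared = layer
    return cleared

# Hypothetical example: strong scalability answers, one open readiness question.
answers = {
    Layer.RELEVANCE: [True, True, True, True, True],
    Layer.READINESS: [True, True, False, True, True],
    Layer.SCALABILITY: [True, True, True, True, True],
}

status = LABELS[highest_layer_cleared(answers)]
print(status)
```

In this example the technology stays at “interesting” despite its scalability answers, because one readiness question is still open.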

The evaluation questions that matter (and why most teams avoid them)

Many teams rely on “scoring.” Scoring can help—but only if the underlying questions are good.

Here are the questions that separate thoughtful evaluation from performative evaluation.

1) What decision are we trying to make—right now?

Not “Is this good?”

But: Do we advance to deeper evaluation, pilot, or stop?

If you can’t state the decision, you can’t design the evaluation.

2) What is the smallest test that reduces meaningful uncertainty?

Great teams don’t run big pilots. They run small tests with clear learning goals.

Ask:

- What uncertainty would kill this later?

- How can we test that quickly?

3) What would make this fail even if the technology works?

This is the most underused question in innovation.

Common answers:

- no process owner

- no integration path

- security blockers

- adoption friction

- unclear economic model

- unclear operating model

If you don’t test these early, your pilot will “succeed” technically and still die.

4) What is the baseline—and how will we measure improvement?

Innovation teams lose credibility when success is vague.

If you don’t define the baseline, your pilot result becomes a story, not evidence.
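
As a worked example of measuring against a baseline, the sketch below computes relative improvement for a single metric. The metric (minutes to process an invoice), the numbers, and the 20% target are invented for illustration, and the function assumes a positive baseline.

```python
def relative_improvement(baseline, pilot, lower_is_better=False):
    """Fractional improvement of the pilot value over the baseline.

    Positive means the pilot did better. Assumes a positive baseline.
    """
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    change = (pilot - baseline) / baseline
    return -change if lower_is_better else change

# Hypothetical figures: average minutes to process one invoice.
baseline_minutes = 12.0   # measured before the pilot
pilot_minutes = 9.0       # measured during the pilot
target = 0.20             # success criterion agreed up front

improvement = relative_improvement(baseline_minutes, pilot_minutes, lower_is_better=True)
met_target = improvement >= target
print(f"improvement: {improvement:.0%}, target met: {met_target}")
```

The point is less the arithmetic than the order of operations: the baseline and the target are fixed before the pilot runs, so the result can be read as evidence rather than interpreted after the fact.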

Why pilots stall: the “pilot trap” innovation teams need to escape

Pilots stall because they’re treated as a default next step rather than a designed experiment.

The pilot trap looks like this:

- “We should run a pilot.”

- Pilot launches without agreed success criteria.

- Stakeholders interpret results differently.

- No one owns the next step.

- The pilot becomes “ongoing.”

- The organization learns the wrong lesson: innovation is slow.

A better approach is to treat pilots like a gated decision process.

Decision gates: the underrated tool that makes innovation faster

People assume decision gates slow teams down.

In reality, decision gates speed things up by preventing ambiguity.

A good gate does three things:

- Defines the decision clearly (advance / stop / watch)

- Defines what evidence is required

- Defines who is accountable

Decision gates reduce the number of meetings because they reduce debate.
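
The three properties of a gate map naturally onto a small data structure. This is a sketch under stated assumptions: the `Gate` class, the evidence names, and the owner title are hypothetical, and the only rule enforced is that an “advance” decision requires all listed evidence.

```python
from dataclasses import dataclass

DECISIONS = ("advance", "stop", "watch")

@dataclass(frozen=True)
class Gate:
    name: str
    required_evidence: frozenset   # what must exist before advancing
    accountable: str               # the single person who owns the decision

    def missing(self, evidence):
        """Evidence still required, in alphabetical order."""
        return sorted(self.required_evidence - set(evidence))

    def decide(self, evidence, decision, rationale):
        """Record a decision; refuse to advance while evidence is missing."""
        if decision not in DECISIONS:
            raise ValueError(f"decision must be one of {DECISIONS}")
        gaps = self.missing(evidence)
        if decision == "advance" and gaps:
            raise ValueError(f"cannot advance, missing evidence: {gaps}")
        return {
            "gate": self.name,
            "decision": decision,
            "accountable": self.accountable,
            "rationale": rationale,
        }

# Hypothetical gate between structured evaluation and pilot design.
gate = Gate(
    name="pilot-readiness",
    required_evidence=frozenset({"baseline", "success_criteria", "security_review"}),
    accountable="Head of Operations",
)

gaps = gate.missing({"baseline"})
record = gate.decide(
    {"baseline", "success_criteria", "security_review"},
    "advance",
    "All readiness evidence in place; integration path confirmed.",
)
print(gaps)
print(record["decision"], "owned by", record["accountable"])
```

Stopping or watching is always allowed, which keeps the gate from becoming a reason to leave items in limbo.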

A practical 2026 workflow: how leading teams evaluate emerging technologies

This is a high-signal workflow many innovation teams are moving toward:

Step 1: Scouting intake (triage)

- categorize the technology

- capture the use case

- identify who cares internally

- decide if it’s worth deeper evaluation

Step 2: Structured evaluation (readiness)

- apply repeatable criteria

- identify key risks early

- confirm data / integration feasibility

- define pilot success criteria

Step 3: Pilot design (not just pilot launch)

- define baseline and metrics

- define timeline and owners

- define stop conditions

- define what “scale” would require

Step 4: Pilot execution with governance

- check-in points tied to metrics

- ensure adoption and ownership are addressed

- prevent “pilot drift”

Step 5: Scale decision

- production readiness assessment

- operating model

- ownership and budget alignment

- go/no-go with rationale captured

If you’re missing any of these, your pipeline will create activity without outcomes.
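
The five steps can also be read as a small state machine in which nothing moves without an explicit decision. The sketch below is one possible encoding; the stage names and the `next_stage` helper are hypothetical labels for the steps above, not part of any specific tool.

```python
STAGES = [
    "intake",            # Step 1: scouting intake (triage)
    "evaluation",        # Step 2: structured evaluation (readiness)
    "pilot_design",      # Step 3: pilot design
    "pilot_execution",   # Step 4: pilot execution with governance
    "scale_decision",    # Step 5: scale decision
]

def next_stage(current, decision):
    """Return where an item goes after an explicit decision at `current`.

    There is no default transition: without "advance", "stop", or "watch",
    the item cannot move, so "not rejected" never counts as "approved".
    """
    if current not in STAGES:
        raise ValueError(f"unknown stage: {current}")
    if decision == "stop":
        return "stopped"
    if decision == "watch":
        return "watching"
    if decision == "advance":
        idx = STAGES.index(current)
        return STAGES[idx + 1] if idx + 1 < len(STAGES) else "production"
    raise ValueError(f"unknown decision: {decision}")

# Walk one hypothetical item through the whole workflow.
stage = "intake"
path = [stage]
while stage in STAGES:
    stage = next_stage(stage, "advance")
    path.append(stage)
print(" -> ".join(path))
```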

Where AI fits—and where it doesn’t

AI is useful in evaluation when it reduces manual effort and increases consistency.

AI can help with:

- summarizing vendor information

- tagging technologies and use cases

- normalizing inputs across sources

- supporting consistent scoring and prioritization

- surfacing patterns across the portfolio

AI does not replace:

- governance

- ownership

- baseline definition

- operational integration

- decision accountability

The teams that win with AI are the ones that embed it inside workflows, not the ones that bolt it on.

A thought-provoking challenge for 2026

Ask yourself this about your innovation program:

If your CEO asked, “Why did we choose this pilot over the other 30 options?”

Could you answer with a defensible, repeatable rationale?

If the answer is no, your program doesn’t have an innovation problem.

It has an evaluation system problem.

And the fix isn’t more ideas.

It’s better decisions.

The 2026 goal: make technology readiness measurable

Innovation teams are increasingly expected to treat readiness as a discipline—not an opinion.

That means building a practice around:

- enterprise readiness signals

- structured evaluation criteria

- repeatable decision gates

- consistent documentation of rationale

- portfolio-level visibility into what’s being evaluated and why

When readiness becomes measurable, innovation becomes scalable.

Final takeaway

Evaluating emerging technologies is a necessary part of innovation, but it’s only one piece of the larger system. To turn evaluation insights into consistent decisions and measurable outcomes, it helps to think in terms of the full innovation lifecycle. We captured that broader approach in the Traction Innovation Framework, a practical guide for managing innovation from discovery through scale.

👉 Explore the Innovation Framework here →

In 2026, the best innovation teams will not be defined by how many ideas they collect.

They’ll be defined by how consistently they can:

- separate signal from noise

- design pilots that produce evidence

- make clear scale/stop decisions

- build organizational confidence in the process

Innovation doesn’t scale because you discover more.

It scales because you decide better.

Traction Technology Supporting Technology Scouting in 2026

Recognized by Gartner as a leading Innovation Management Platform, Traction Technology helps drive digital transformation by streamlining the discovery and management of new technologies and emerging startups. Our platform, built for the needs of Fortune 500 companies, helps you save time, reduce risk, and accelerate your path to innovation.

Key Features & Benefits:

With our platform, innovation teams can:

- 🔍 Scout and evaluate emerging technologies in minutes

- 📊 Access AI-powered insights to make data-driven decisions

- 🤝 Collaborate seamlessly across teams and business units

- 🚀 Accelerate pilots and scale solutions that drive real business impact

👉 New: Experience Traction AI Scouting and Trend Reports with a Free Test Drive, with no demo scheduling required.

Try Traction AI for Free →

Or, if you prefer a guided experience:

Book a Personalized Demo →

Awards and Industry Recognition

Recognized by Gartner as a leading Innovation Management Platform

"By accelerating technology discovery and evaluation, Traction Technology delivers a faster time-to-innovation and supports revenue-generating digital transformation initiatives."
